Starting on RNNs

Mar 28, 2014

A first attempt at training RNNs

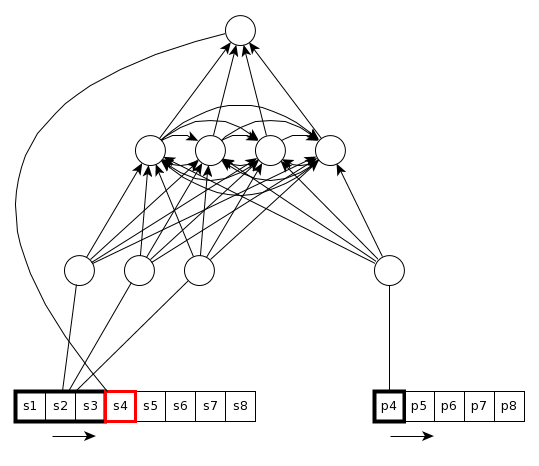

This week I focused on training an RNN to solve our task. The RNN’s structure is really simple: it maps the k previous samples and the phone of the sample to predict to a recurrent hidden layer, which itself linearly maps to the output. The input is a sliding window of fixed length over the sequence and the phones information.

For starters, I’m interested in overfitting a single utterance, i.e. given the first k samples of the sequence and a sequence of phone information, I’d like to be able to perfectly reconstruct the whole sequence. I trained my toy RNN model using this script and then compared the original sequence with two types of reconstructions:

- the reconstruction you get when sequentially predictiong the next sample using the ground truth as the k previous samples and the phone information

- the reconstruction you get when sequentially predictiong the next sample using the previously-predicted samples as the k previous samples and the phone information

Here are the audio files:

Original:

Ground-truth-based reconstruction:

Prediction-based reconstruction:

For reference, the model converges to a 0.426 mean squared error, although this number cannot be compared with other experiments. As you can see, although the model isn’t that bad for ground-truth-based reconstruction, it performs very poorly when the only information available is the k first samples of the sequence and the phone information.

Note that I haven’t tried to apply the good practice recommendations for RNNs (i.e. gradient clipping and regularization) yet; for now I was interested in running a quick experiment and making sure my code and scripts were working properly.

One interesting thing I noticed was that I had to keep the number of recurrent hidden units quite low (in the order of 100 units), otherwise the error would start to go up during training (is there an exploding gradient effect at play when increasing the number of hidden units?).

Next week I’d like to implement regularization and gradient clipping techniques in my toy RNN and see if it improves results.

Share